MongoDB has grown from a basic JSON key-value store to one of the most popular NoSQL database solutions in use today. It is widely supported and provides flexible JSON document storage at scale, along with native querying and analytics capabilities. These attributes have led to wide adoption, especially alongside JavaScript web applications.

As capable as it is, there are still instances where MongoDB alone can’t satisfy all of the requirements for an application. In those cases, a copy of the data has to be moved to another platform, typically via a change data capture (CDC) solution. The copy can then be used to build data lakes, populate data warehouses, or serve specific use cases such as offloading analytics and text search.

In this post, we’ll walk through how CDC works on MongoDB and how it can be implemented, and then delve into the reasons why you might want to implement CDC with MongoDB.

Bifurcation vs Polling vs Change Data Capture

Change data capture is a mechanism that can be used to move data from one data repository to another. It is not the only option; there are two common alternatives:

- You can bifurcate incoming data, splitting it into multiple streams that are sent to multiple data stores. Generally, this means your applications submit new data to a queue. This is not a great option because it limits your application to APIs that resemble a queue, whereas applications tend to need higher-level features such as ACID transactions. In practice, we generally want the application to talk directly to a database. The application could submit data via a microservice or application server instead, but this only moves the problem: those services would still need to talk directly to the database.

- You could periodically poll your front-end database and push data into your analytical platform. While this sounds simple, the details get tricky in practice, particularly if you need to support updates to your data. You have also introduced another process that has to be run, monitored and scaled.

Using CDC avoids these problems. The application can still leverage the database features (maybe via a service) and you don’t have to set up a polling infrastructure. There is another key difference: CDC gives you the freshest version of the data. It enables true real-time analytics on your application data, assuming the platform you send the data to can consume the events in real time.
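To make the polling trade-off concrete, here is a minimal sketch in plain Python. It assumes every document carries an `updated_at` timestamp that the application maintains (a hypothetical field, not something MongoDB adds for you), and it uses in-memory dictionaries in place of real databases. The point is only to show one of the tricky details: a timestamp-based poll can pick up updates, but it never sees deletes.

```python
from datetime import datetime, timedelta


def poll_changes(source, last_polled_at):
    """Return documents modified since the previous poll.

    Assumes each document has an application-maintained `updated_at` field.
    """
    return [doc for doc in source.values() if doc["updated_at"] > last_polled_at]


t0 = datetime(2024, 1, 1, 12, 0, 0)

# In-memory stand-ins for the front-end database and the analytics copy.
source = {
    1: {"_id": 1, "status": "new", "updated_at": t0},
    2: {"_id": 2, "status": "new", "updated_at": t0},
}
replica = {}

# First poll: everything is newer than datetime.min, so both documents are copied.
for doc in poll_changes(source, datetime.min):
    replica[doc["_id"]] = dict(doc)
last_polled_at = t0

# Between polls, one document is updated and another is deleted.
source[1] = {"_id": 1, "status": "shipped", "updated_at": t0 + timedelta(minutes=1)}
del source[2]

# Second poll: the update is picked up, but the delete leaves no trace to find.
for doc in poll_changes(source, last_polled_at):
    replica[doc["_id"]] = dict(doc)

print(replica[1]["status"])  # the update arrived
print(2 in replica)          # the deleted document is still in the copy
```

A CDC feed reports the delete as an event in its own right, so the copy does not drift in this way.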

Options For Change Data Capture on MongoDB

Apache Kafka

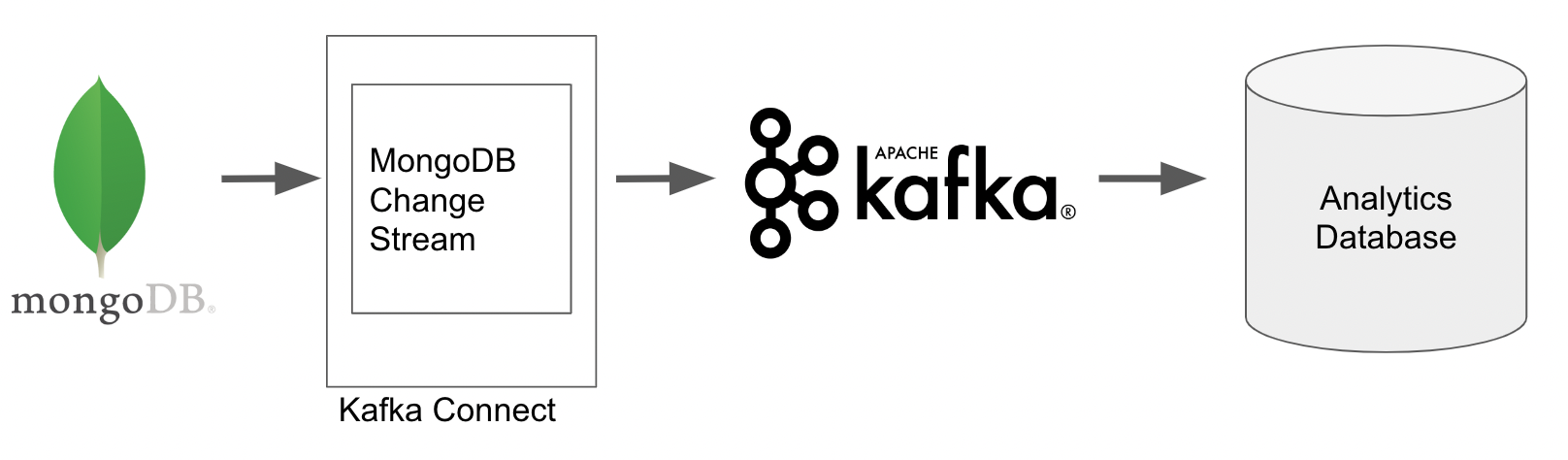

The native CDC architecture for capturing change events in MongoDB uses Apache Kafka. MongoDB provides Kafka source and sink connectors that can be used to write the change events to a Kafka topic and then output those changes to another system such as a database or data lake.

The out-of-the-box connectors make it fairly simple to set up the CDC solution; however, they do require a Kafka cluster. If Kafka is not already part of your architecture, it adds another layer of complexity and cost.
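As a rough illustration of what the source side looks like, the sketch below builds the JSON payload you might register with Kafka Connect for the MongoDB source connector. The connection URI, database, collection and connector name are placeholders invented for this example, and only a handful of settings are shown; check the connector documentation for the version you run before relying on any of them.

```python
import json


def mongo_source_connector_config(name, connection_uri, database, collection, topic_prefix):
    """Build a Kafka Connect payload for the MongoDB source connector.

    All argument values used below are hypothetical placeholders.
    """
    return {
        "name": name,
        "config": {
            "connector.class": "com.mongodb.kafka.connect.MongoSourceConnector",
            "connection.uri": connection_uri,
            "database": database,
            "collection": collection,
            # Events are published to "<topic.prefix>.<database>.<collection>".
            "topic.prefix": topic_prefix,
            # Ask MongoDB to include the full document on update events.
            "change.stream.full.document": "updateLookup",
        },
    }


payload = mongo_source_connector_config(
    name="orders-source",                      # hypothetical connector name
    connection_uri="mongodb://localhost:27017",  # hypothetical URI
    database="shop",
    collection="orders",
    topic_prefix="cdc",
)
print(json.dumps(payload, indent=2))
```

A payload like this would typically be sent to the Kafka Connect REST API (`POST /connectors`); a sink connector for the target system is configured separately.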

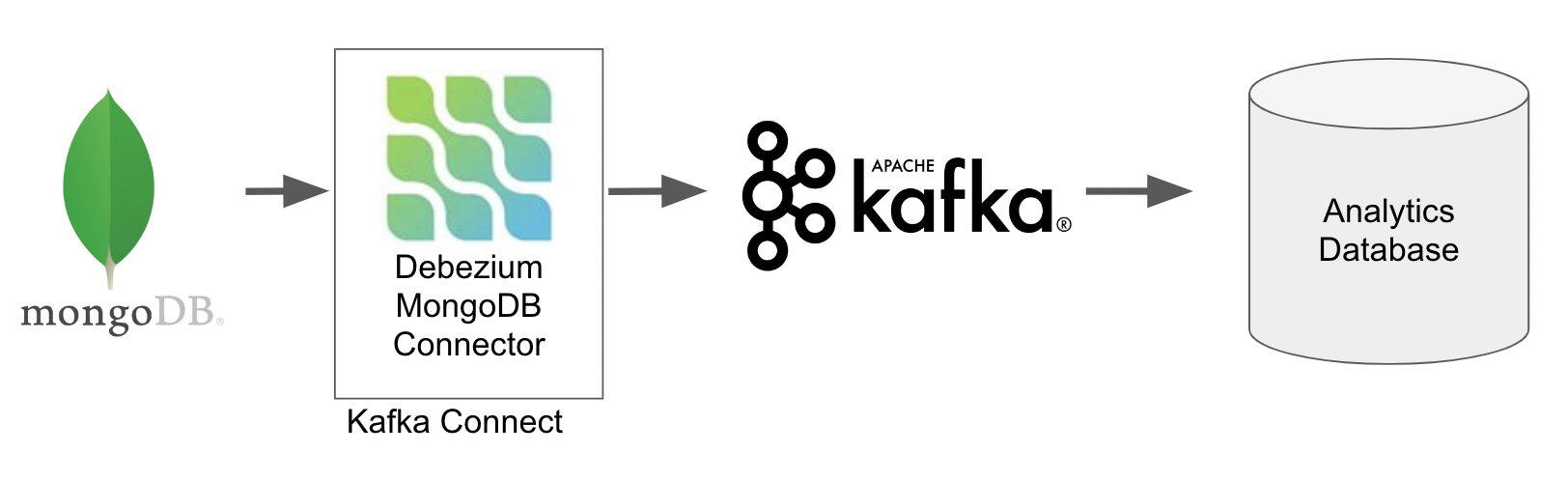

Debezium

It is also possible to capture MongoDB change events using Debezium. If you are already familiar with Debezium, setting this up can be straightforward.

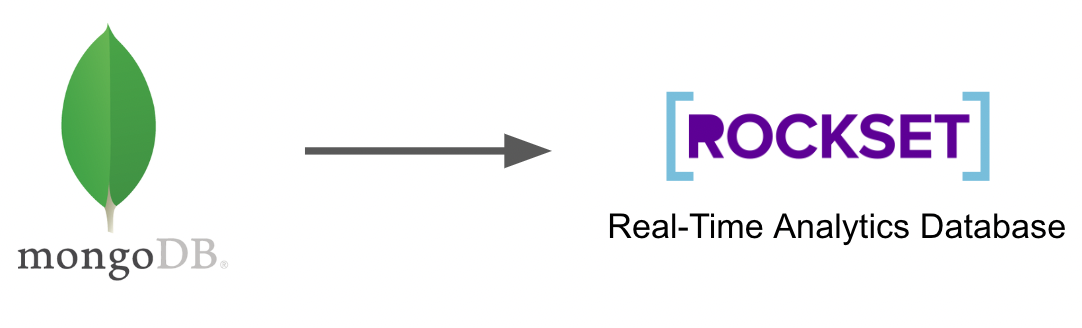

MongoDB Change Streams and Rockset

If your goal is to execute real-time analytics or text search, then Rockset’s out-of-the-box connector that leverages MongoDB change streams is a good choice. The Rockset solution requires neither Kafka nor Debezium. Rockset captures change events directly from MongoDB, writes them to its analytics database, and automatically indexes the data for fast analytics and search.
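To give a feel for what consuming change streams involves, here is a minimal sketch of applying change events to a downstream copy. It is not how Rockset's connector is implemented; it only illustrates the general idea. The event fields (`operationType`, `documentKey`, `fullDocument`, `updateDescription`) follow the shape of MongoDB change events, while the in-memory `replica` dictionary and the sample events are made up for the example. As a simplification, partial updates are only handled for top-level fields.

```python
def apply_change(replica, event):
    """Apply one MongoDB-style change event to an in-memory copy keyed by _id."""
    op = event["operationType"]
    doc_id = event["documentKey"]["_id"]

    if op in ("insert", "replace"):
        replica[doc_id] = dict(event["fullDocument"])
    elif op == "update":
        if event.get("fullDocument") is not None:
            # Present when the stream was opened with full-document lookup.
            replica[doc_id] = dict(event["fullDocument"])
        else:
            # Simplification: only top-level field changes are applied here.
            doc = replica.setdefault(doc_id, {"_id": doc_id})
            description = event["updateDescription"]
            doc.update(description.get("updatedFields", {}))
            for field in description.get("removedFields", []):
                doc.pop(field, None)
    elif op == "delete":
        replica.pop(doc_id, None)
    # Other event types (drop, rename, invalidate, ...) are ignored in this sketch.
    return replica


# Hand-written sample events standing in for a live change stream.
events = [
    {"operationType": "insert", "documentKey": {"_id": 1},
     "fullDocument": {"_id": 1, "name": "Ada", "plan": "free"}},
    {"operationType": "insert", "documentKey": {"_id": 2},
     "fullDocument": {"_id": 2, "name": "Bob", "plan": "free"}},
    {"operationType": "update", "documentKey": {"_id": 1},
     "updateDescription": {"updatedFields": {"plan": "pro"}, "removedFields": []}},
    {"operationType": "delete", "documentKey": {"_id": 2}},
]

replica = {}
for event in events:
    apply_change(replica, event)

print(replica)
```

With PyMongo, events of this shape can be read by iterating over `collection.watch(full_document="updateLookup")`; change streams require a replica set or sharded cluster.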

Your choice to use Kafka, Debezium or a fully integrated solution like Rockset will depend on your use case, so let’s take a look at some use cases for CDC on MongoDB.

Use Cases for CDC on MongoDB

Offloading Analytics

One of the main use cases for CDC on MongoDB is to offload analytical queries. MongoDB has native analytical capabilities that allow you to build complex transformation and aggregation pipelines to be executed on the documents. However, these pipelines, although rich in functionality, can be cumbersome to write because they use a query language specific to MongoDB. Analysts who are used to SQL face a steep learning curve.

Documents in MongoDB can also have complex structures. Data is stored as JSON documents that can contain nested objects and arrays, which add further intricacies to analytical queries, such as accessing nested properties and exploding arrays to analyze individual elements.

Finally, performing large analytical queries on a production front-end instance can negatively impact user experience, especially if the analytics are run frequently. They can significantly slow down reads and writes, which developers want to avoid, particularly as MongoDB is often chosen for its fast read and write operations. The alternative is ever larger MongoDB machines and clusters, which increases cost.
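As a small illustration of both points, the sketch below shows an aggregation pipeline that explodes a nested array and totals a quantity per product, alongside a plain Python function that computes the same result so you can see what the stages do. The `orders` documents, the `items` array and its `sku` and `qty` fields are all hypothetical.

```python
from collections import defaultdict

# Aggregation pipeline as it would be passed to MongoDB (shown as data only).
pipeline = [
    {"$unwind": "$items"},  # one output document per array element
    {"$group": {"_id": "$items.sku", "total_qty": {"$sum": "$items.qty"}}},
    {"$sort": {"total_qty": -1}},
]


def total_qty_by_sku(orders):
    """Pure-Python equivalent of the pipeline above, for illustration."""
    totals = defaultdict(int)
    for order in orders:
        # Like $unwind's default behaviour, orders without items contribute nothing.
        for item in order.get("items", []):
            totals[item["sku"]] += item["qty"]
    rows = [{"_id": sku, "total_qty": qty} for sku, qty in totals.items()]
    return sorted(rows, key=lambda row: row["total_qty"], reverse=True)


# Hypothetical order documents with a nested array of line items.
orders = [
    {"_id": 1, "items": [{"sku": "A", "qty": 2}, {"sku": "B", "qty": 1}]},
    {"_id": 2, "items": [{"sku": "A", "qty": 3}]},
    {"_id": 3},
]

result = total_qty_by_sku(orders)
print(result)
```

In SQL terms this is roughly a `GROUP BY` over an unnested array, which is the kind of query an analyst would normally reach for first.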

To overcome these challenges, it is common to send data to an analytical platform via CDC so that queries can be run using familiar languages such as SQL without affecting performance of the front-end system. Kafka or Debezium can be used to extract the changes and then write them to a suitable analytics platform, whether this is a data lake, data warehouse or a real-time analytics database.

Rockset takes this a step further. It not only consumes CDC events directly from MongoDB, but also supports SQL queries natively (including JOINs) on the documents and provides functionality to manipulate complex data structures and arrays, all within SQL queries. This enables real-time analytics because there is no need to transform and manipulate the documents before querying them.

Search Options on MongoDB

Another compelling use case for CDC on MongoDB is to facilitate text searches. Again, MongoDB has implemented features such as text indexes that support this natively. Text indexes allow certain properties to be indexed specifically for search applications. This means documents can be retrieved based on proximity matching and not just exact matches. You can also include multiple properties in the index such as a product name and a description, so both are used to determine whether a document matches a particular search term.
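For reference, here is a minimal sketch of what a text index over two properties and a matching search query look like when expressed as the plain structures a MongoDB driver accepts. The `name` and `description` fields mirror the product example above and are otherwise hypothetical; nothing here connects to a database.

```python
# Index specification covering two properties; "text" marks a text index key.
TEXT_INDEX_KEYS = [("name", "text"), ("description", "text")]


def text_search_filter(term):
    """Build a query filter that runs a text search for the given term."""
    return {"$text": {"$search": term}}


def text_score_projection():
    """Build a projection that exposes the relevance score of each match."""
    return {"score": {"$meta": "textScore"}}


query = text_search_filter("wireless headphones")
projection = text_score_projection()
print(TEXT_INDEX_KEYS)
print(query)
print(projection)
```

With PyMongo, the index could be created with `collection.create_index(TEXT_INDEX_KEYS)` and the search run with `collection.find(query, projection)`.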

While this is powerful, there may still be instances where offloading to a dedicated database for search is preferable. Again, performance is the main reason, especially if fast writes are important: adding text indexes to a collection in MongoDB adds overhead to every insertion because of the indexing work.

If your use case dictates a richer set of search capabilities, such as fuzzy matching, then you may want to implement a CDC pipeline to copy the required text data from MongoDB into Elasticsearch. However, Rockset is still an option if you are happy with proximity matching, want to offload search queries, and also want to retain all of the real-time analytics benefits discussed previously. Rockset’s search capability is also SQL based, which might reduce the burden of writing search queries, as both Elasticsearch and MongoDB use their own query languages.

Conclusion

MongoDB is a scalable and powerful NoSQL database that provides a lot of functionality out of the box, including fast reads (get by primary key) and writes, JSON document manipulation, aggregation pipelines and text search. Even with all this, a CDC solution may still enable greater capabilities and/or reduce costs, depending on your specific use case. Most notably, you might want to implement CDC on MongoDB to reduce the burden on production instances by offloading load-intensive tasks, such as real-time analytics, to another platform.

MongoDB provides Kafka connectors out of the box, and Debezium offers its own connector for MongoDB, to aid with CDC implementations; however, depending on your existing architecture, this may mean implementing new infrastructure on top of maintaining a separate database for storing the data.

Rockset skips the requirement for Kafka and Debezium with its inbuilt connector, based on MongoDB change streams, reducing the latency of data ingestion and allowing real-time analytics. With automatic indexing and the ability to query structured or semi-structured data natively with SQL, you can write powerful queries without the overhead of ETL pipelines, meaning queries can be executed on CDC data within one to two seconds of it being produced.

Lewis Gavin has been a data engineer for five years and has also been blogging about skills within the Data community for four years on a personal blog and Medium. During his computer science degree, he worked for the Airbus Helicopter team in Munich enhancing simulator software for military helicopters. He then went on to work for Capgemini where he helped the UK government move into the world of Big Data. He is currently using this experience to help transform the data landscape at easyfundraising.org.uk, an online charity cashback site, where he is helping to shape their data warehousing and reporting capability from the ground up.