Whatnot is a venture-backed e-commerce startup built for the streaming age. We’ve built a live video marketplace for collectors, fashion enthusiasts, and superfans that allows sellers to go live and sell anything they’d like through our video auction platform. Think eBay meets Twitch.

Coveted collectibles were the first items on our livestream when we launched in 2020. Today, through live shopping videos, sellers offer products in more than 100 categories, from Pokemon and baseball cards to sneakers, antique coins and much more.

Crucial to Whatnot’s success is connecting communities of buyers and sellers through our platform. The platform gathers real-time signals from our audience: the videos they watch, the comments and social interactions they leave, and the products they buy. We analyze this data to rank the most popular and relevant videos, which we then present to users on the home screen of Whatnot’s mobile app or website.
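To make the ranking idea concrete, here is a minimal Python sketch that scores live shows as a weighted sum of watch, comment and purchase signals and returns the top k. The `ShowSignals` fields and the weights are hypothetical. The post does not describe Whatnot's actual scoring, which would also need to account for per-user relevance.

```python
from dataclasses import dataclass


@dataclass
class ShowSignals:
    show_id: str
    viewers: int    # people currently watching the show
    comments: int   # comments/chat messages in a recent window
    purchases: int  # items bought in a recent window


# Hypothetical weights: buying counts more than commenting, which counts more than watching.
WEIGHTS = {"viewers": 1.0, "comments": 2.0, "purchases": 5.0}


def score(s: ShowSignals) -> float:
    """Weighted sum of engagement signals (illustrative only)."""
    return (WEIGHTS["viewers"] * s.viewers
            + WEIGHTS["comments"] * s.comments
            + WEIGHTS["purchases"] * s.purchases)


def rank_shows(shows: list[ShowSignals], k: int = 10) -> list[str]:
    """Return the ids of the top-k shows by score, highest first."""
    return [s.show_id for s in sorted(shows, key=score, reverse=True)[:k]]


shows = [
    ShowSignals("cards", viewers=120, comments=30, purchases=4),     # score 200
    ShowSignals("sneakers", viewers=80, comments=50, purchases=10),  # score 230
    ShowSignals("coins", viewers=200, comments=5, purchases=0),      # score 210
]
print(rank_shows(shows))
```

A weighted sum is the simplest possible ranker; a production feed would likely replace the fixed weights with a learned model, but the inputs (fresh engagement counts per show) are the same kind of real-time signal described above.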

However, to sustain and accelerate our growth, we needed to take our home feed to the next level: ranking show suggestions for each user based on the most interesting and relevant content, in real time.

This would require ingesting and analyzing more data, and a wider variety of it, all in real time. To support this, we sought a platform where data science and machine learning professionals could iterate quickly and deploy to production faster, while the system sustained low-latency, high-concurrency workloads.

High Cost of Running Elasticsearch

On the surface, our legacy data pipeline appeared to perform well and was built on modern components, including AWS-hosted Elasticsearch for retrieving and ranking content using batch features loaded at ingestion time. A single query returned in tens of milliseconds, and concurrency topped out at 50-100 queries per second.

However, we plan to grow usage 5-10x in the next year, both by expanding into much larger product categories and by boosting the intelligence of our recommendation engine.
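As back-of-the-envelope arithmetic, and assuming query load grows roughly in proportion to usage (an assumption, not something stated here), a 5-10x increase on today's 50-100 queries per second implies:

```latex
q_{\text{next}} = g \cdot q_{\text{now}}, \quad g \in [5, 10], \quad q_{\text{now}} \in [50, 100]\ \text{QPS}
\;\Longrightarrow\; q_{\text{next}} \in [5 \cdot 50,\ 10 \cdot 100] = [250,\ 1000]\ \text{QPS}
```

Even the low end of that range sits well above the 50-100 QPS ceiling of the existing setup.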

The bigger pain point was the high operational overhead of Elasticsearch for our small team. This was draining productivity and severely limiting our ability to improve the intelligence of our recommendation engine to keep up with our growth.

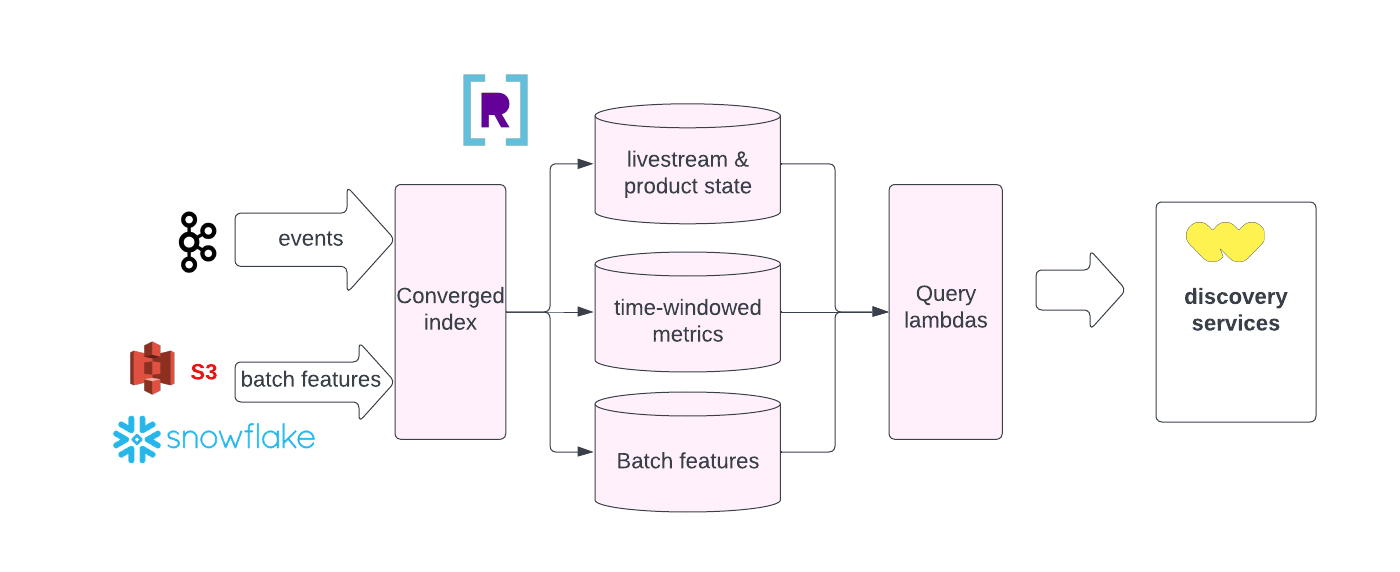

Say we wanted to add a new user signal to our analytics pipeline. Using our previous serving infrastructure, the data would have to be sent through Confluent-hosted instances of Apache Kafka and ksqlDB and then denormalized and/or rolled up. Then, a specific Elasticsearch index would have to be manually adjusted or built for that data. Only then could we query the data. The entire process took weeks.
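The denormalize and roll-up step can be sketched in plain Python to show the data shape involved. In the real pipeline this work ran in ksqlDB; the event fields and the `shows` lookup table here are hypothetical.

```python
from collections import Counter

# Hypothetical raw events, e.g. as they might arrive on a Kafka topic.
events = [
    {"user_id": "u1", "show_id": "s1", "type": "comment"},
    {"user_id": "u2", "show_id": "s1", "type": "comment"},
    {"user_id": "u1", "show_id": "s2", "type": "purchase"},
]

# Hypothetical dimension table describing each show.
shows = {"s1": {"category": "trading_cards"}, "s2": {"category": "sneakers"}}


def rollup(events):
    """Roll up raw events into counts per (show_id, event type)."""
    return Counter((e["show_id"], e["type"]) for e in events)


def denormalize(counts, shows):
    """Merge the counts and the show metadata into one flat document per show,
    the shape a search index typically expects."""
    docs = {}
    for (show_id, etype), n in counts.items():
        doc = docs.setdefault(show_id, {"show_id": show_id, **shows[show_id]})
        doc[f"{etype}_count"] = n
    return list(docs.values())


print(denormalize(rollup(events), shows))
```

Every new signal means changing this flattening logic and then reshaping the index that stores its output, which is where the weeks went.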

Just maintaining our existing queries was also a huge effort. Our data changes frequently, so we were constantly upserting new data into existing tables, and every upsert required a time-consuming update to the relevant Elasticsearch index. After every index was created or updated, we also had to manually test and update every other component in our data pipeline to make sure we had not created bottlenecks or introduced data errors.

Solving for Efficiency, Performance, and Scalability

Our new real-time analytics platform would be core to our growth strategy, so we carefully evaluated many options.

One option we designed was a data pipeline that used Airflow to pull data from Snowflake and push it into one of our OLTP databases serving the Elasticsearch-powered feed, optionally with a cache in front. We could schedule this job to run at 5-, 10- or 20-minute intervals, but the added latency kept us from meeting our SLAs, and the technical complexity slowed the developer velocity we were after.
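A simple model shows why a scheduled batch job struggles here. Assume each run snapshots the source when it starts, takes R minutes, and runs every T minutes, and that a cache with a TTL of C minutes sits in front. An event that lands just after a snapshot can then take up to about T + R + C minutes to reach the feed. The 3-minute job runtime below is a hypothetical figure.

```python
def worst_case_staleness_min(interval_min: float, job_runtime_min: float,
                             cache_ttl_min: float = 0.0) -> float:
    """Upper bound on how old feed data can be, in minutes.

    Assumes each batch run snapshots the source when it starts, so an event
    arriving just after a snapshot waits a full interval, then the job
    runtime, then (if cached) up to one cache TTL before users see it.
    """
    return interval_min + job_runtime_min + cache_ttl_min


# Hypothetical 3-minute job runtime, no cache.
for interval in (5, 10, 20):
    print(f"{interval}-minute schedule: up to "
          f"{worst_case_staleness_min(interval, 3):.0f} minutes stale")
```

Even the most aggressive schedule leaves data several minutes old, orders of magnitude behind a pipeline that ingests and serves events in real time.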

So we evaluated many real-time alternatives to Elasticsearch, including Rockset, Materialize, Apache Druid and Apache Pinot. Every one of these SQL-first platforms met our requirements, but we were looking for a partner that could take on the operational overhead as well.

In the end, we chose Rockset over these other options because it had the best blend of features to underpin our growth: a fully managed platform that boosts developer productivity, with real-time ingestion and query speeds, high concurrency and automatic scalability.

Let’s look at our highest priority, developer productivity, which Rockset turbocharges in several ways. With Rockset’s Converged Index™ feature, all fields, including nested ones, are indexed, which ensures that queries are automatically optimized, running fast no matter the type of query or the structure of the data. We no longer have to worry about the time and labor of building and maintaining indexes, as we had to with Elasticsearch. Rockset also makes SQL a first-class citizen, which is great for our data scientists and machine learning engineers. It offers a full menu of SQL commands, including four kinds of joins, searches and aggregations. Such complex analytics were harder to perform using Elasticsearch.
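Rockset describes its Converged Index as maintaining inverted, columnar and row indexes over every field. The sketch below is a toy model of only the inverted-index part and only of the idea that nested fields get indexed automatically. It flattens each JSON-like document into dotted paths and maps every (path, value) pair to the documents containing it. It is not Rockset's implementation, and the document fields are hypothetical.

```python
from collections import defaultdict


def flatten(doc, prefix=""):
    """Yield (path, value) for every leaf field, descending into dicts and lists."""
    if isinstance(doc, dict):
        for key, val in doc.items():
            yield from flatten(val, f"{prefix}.{key}" if prefix else key)
    elif isinstance(doc, list):
        for item in doc:
            # Array elements share the parent path, so any element can match.
            yield from flatten(item, prefix)
    else:
        yield prefix, doc


class InvertedIndex:
    """Maps (field path, value) pairs to the ids of documents containing them."""

    def __init__(self):
        self._postings = defaultdict(set)

    def add(self, doc_id, doc):
        # No schema or mapping needed: every leaf field is indexed on insert.
        for path, value in flatten(doc):
            self._postings[(path, value)].add(doc_id)

    def lookup(self, path, value):
        return sorted(self._postings.get((path, value), set()))


idx = InvertedIndex()
idx.add("s1", {"seller": {"name": "ana", "tier": "gold"}, "tags": ["pokemon", "cards"]})
idx.add("s2", {"seller": {"name": "bo", "tier": "gold"}, "tags": ["sneakers"]})
print(idx.lookup("seller.tier", "gold"))
```

The point of the design is that adding a field to incoming documents requires no index change at all, in contrast with hand-maintaining an Elasticsearch index per query pattern.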

With Rockset, our development workflow is much faster. When we need to add a new user signal or data source to our ranking engine, we can join the new dataset without denormalizing it first. If the feature works as intended and performance is good, we can finalize it and put it into production within days. If latency is high, we can consider denormalizing the data or doing some precalculations in ksqlDB first. Either way, this cuts our time-to-ship from weeks to days.
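The join-first workflow can be illustrated with SQLite standing in for Rockset, since both accept standard SQL joins (Rockset's dialect differs in details). The `shows` and `follows` tables are hypothetical: `follows` plays the role of a newly added user signal that is joined at query time rather than denormalized into `shows` ahead of time.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE shows (show_id TEXT PRIMARY KEY, category TEXT);
    CREATE TABLE follows (user_id TEXT, show_id TEXT);  -- hypothetical new signal
    INSERT INTO shows VALUES ('s1', 'cards'), ('s2', 'sneakers'), ('s3', 'coins');
    INSERT INTO follows VALUES ('u1', 's1'), ('u2', 's1'), ('u3', 's2');
""")

# Join the new signal at query time; shows with no followers still appear (LEFT JOIN).
QUERY = """
    SELECT s.show_id, s.category, COUNT(f.user_id) AS followers
    FROM shows s
    LEFT JOIN follows f ON f.show_id = s.show_id
    GROUP BY s.show_id, s.category
    ORDER BY followers DESC, s.show_id
"""
rows = conn.execute(QUERY).fetchall()
print(rows)
```

If this query proved too slow at production volume, the fallback described above would be to precompute the follower counts (denormalize) upstream and query the flattened result instead.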

Rockset’s fully managed SaaS platform is mature, and Rockset was a first mover in the space. Consider how Rockset decouples storage from compute: this gives it instant, automatic scalability to handle our growing but spiky traffic (such as when a popular product or streamer comes online). Upserting data is also a breeze thanks to Rockset’s mutable architecture and Write API, which make inserts, updates and deletes simple.
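To illustrate what a mutable, id-keyed store buys you, here is an in-memory Python stand-in. It is hypothetical and does not call Rockset's Write API. It assumes documents carry a unique `_id` field: writing a document whose `_id` already exists replaces it, a patch merges selected fields, and deletes remove by id, so no index has to be rebuilt by hand.

```python
class Collection:
    """In-memory stand-in for a mutable, id-keyed collection (hypothetical)."""

    def __init__(self):
        self._docs = {}

    def upsert(self, docs):
        # New _id -> insert; existing _id -> replace the whole document.
        for doc in docs:
            self._docs[doc["_id"]] = dict(doc)

    def patch(self, doc_id, fields):
        # Merge only the given fields into an existing document.
        self._docs[doc_id].update(fields)

    def delete(self, doc_ids):
        # Missing ids are ignored, so deletes are idempotent.
        for doc_id in doc_ids:
            self._docs.pop(doc_id, None)

    def get(self, doc_id):
        return self._docs.get(doc_id)

    def __len__(self):
        return len(self._docs)


c = Collection()
c.upsert([{"_id": "s1", "viewers": 10}, {"_id": "s2", "viewers": 5}])
c.upsert([{"_id": "s1", "viewers": 42}])  # update via upsert
c.patch("s2", {"viewers": 7})
c.delete(["s2"])
print(c.get("s1"), len(c))
```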

As for performance, Rockset delivered true real-time ingestion and queries, with sub-50 millisecond end-to-end latency. That matched Elasticsearch, but with much lower operational effort and cost, while handling a much higher volume and variety of data and enabling more complex analytics, all in SQL.

It’s not just the Rockset product that’s been great. The Rockset engineering team has been a fantastic partner. Whenever we had an issue, we messaged them in Slack and got an answer quickly. It’s not the typical vendor relationship – they have truly been an extension of our team.

A Plethora of Other Real-Time Uses

We are so happy with Rockset that we plan to expand its usage in many areas. One slam dunk would be community trust and safety, such as monitoring comments and chat for offensive language, a use case where Rockset is already helping customers.

We also want to use Rockset as a mini-OLAP database to provide real-time reports and dashboards to our sellers. Rockset would serve as a real-time alternative to Snowflake, and it would be more convenient and easier to use. For instance, data upserted through the Rockset API is reindexed instantly and ready for queries.

We are also seriously looking into making Rockset our real-time feature store for machine learning. Rockset would fit well in a machine learning pipeline that feeds real-time features, such as the count of chats in a stream over the last 20 minutes. Data would stream from Kafka into Rockset, where a Query Lambda would apply the same logic as our batch dbt transformations on top of Snowflake. Ideally, we would one day abstract these transformations so the same definitions could be used in both the Rockset and Snowflake dbt pipelines, for composability and repeatability. Data scientists know SQL, which Rockset strongly supports.
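The example feature, chats per stream over the last 20 minutes, is a sliding-window count. In Rockset it would be expressed as a SQL query over the ingested chat data. The sketch below shows the same computation in plain Python so the window semantics are explicit. Stream ids and timestamps (in seconds) are hypothetical, and it assumes events and queries arrive in non-decreasing time order per stream.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 20 * 60  # "last 20 minutes"


class ChatCounter:
    """Counts chat messages per stream within a sliding time window."""

    def __init__(self, window: int = WINDOW_SECONDS):
        self.window = window
        self._events = defaultdict(deque)  # stream_id -> timestamps, oldest first

    def record(self, stream_id: str, ts: float) -> None:
        # Assumes timestamps for a stream arrive in non-decreasing order.
        self._events[stream_id].append(ts)

    def count(self, stream_id: str, now: float) -> int:
        """Number of chats with timestamp in (now - window, now]."""
        q = self._events[stream_id]
        # Evict expired events; assumes `now` never moves backwards.
        while q and q[0] <= now - self.window:
            q.popleft()
        return len(q)


counter = ChatCounter()
for t in (0, 300, 900, 1150):
    counter.record("stream-1", t)
print(counter.count("stream-1", now=1200))
```

Keeping timestamps in a deque makes eviction cheap because expired events are always at the front; a SQL version would instead filter on the timestamp at query time and count the matching rows.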

Rockset is in our sweet spot now. Of course, in a perfect world that revolved around Whatnot, Rockset would add features especially for us, such as stream processing, approximate nearest neighbor search and auto-scaling, to name a few. We still have some use cases where real-time joins aren’t enough, forcing us to do some pre-calculations. If we could get all of that in a single platform rather than having to deploy a heterogeneous stack, we would love it.

Learn more about how we build real-time signals in our user Home Feed. And visit the Whatnot career page to see the openings on our engineering team.