This post is the foreword written by Brad Smith for Microsoft’s report Governing AI: A Blueprint for the Future. The first part of the report details five ways governments should consider policies, laws, and regulations around AI. The second part focuses on Microsoft’s internal commitment to ethical AI, showing how the company is both operationalizing and building a culture of responsible AI.

“Don’t ask what computers can do, ask what they should do.”

That is the title of the chapter on AI and ethics in a book I co-authored in 2019. At the time, we wrote that, “This may be one of the defining questions of our generation.” Four years later, the question has seized center stage not just in the world’s capitals, but around many dinner tables.

As people have used or heard about the power of OpenAI’s GPT-4 foundation model, they have often been surprised or even astounded. Many have been enthused or even excited. Some have been concerned or even frightened. What has become clear to almost everyone is something we noted four years ago: we are the first generation in the history of humanity to create machines that can make decisions that previously could only be made by people.

Countries around the world are asking common questions. How can we use this new technology to solve our problems? How do we avoid or manage new problems it might create? How do we control technology that is so powerful?

These questions call not only for broad and thoughtful conversation, but for decisive and effective action. This paper offers some of our ideas and suggestions as a company.

These suggestions build on the lessons we’ve been learning based on the work we’ve been doing for several years. Microsoft CEO Satya Nadella set us on a clear course when he wrote in 2016 that, “Perhaps the most productive debate we can have isn’t one of good versus evil: The debate should be about the values instilled in the people and institutions creating this technology.”

Since that time, we’ve defined, published, and implemented ethical principles to guide our work. And we’ve built out constantly improving engineering and governance systems to put these principles into practice. Today, we have nearly 350 people working on responsible AI at Microsoft, helping us implement best practices for building safe, secure, and transparent AI systems designed to benefit society.

New opportunities to improve the human condition

The resulting advances in our approach have given us the capability and confidence to see ever-expanding ways for AI to improve people’s lives. We’ve seen AI help save individuals’ eyesight, make progress on new cures for cancer, generate new insights about proteins, and provide predictions to protect people from hazardous weather. Other innovations are fending off cyberattacks and helping to protect fundamental human rights, even in nations afflicted by foreign invasion or civil war.

Everyday activities will benefit as well. By acting as a copilot in people’s lives, the power of foundation models like GPT-4 is turning search into a more powerful tool for research and improving productivity for people at work. And, for any parent who has struggled to remember how to help their 13-year-old child through an algebra homework assignment, AI-based assistance is a helpful tutor.

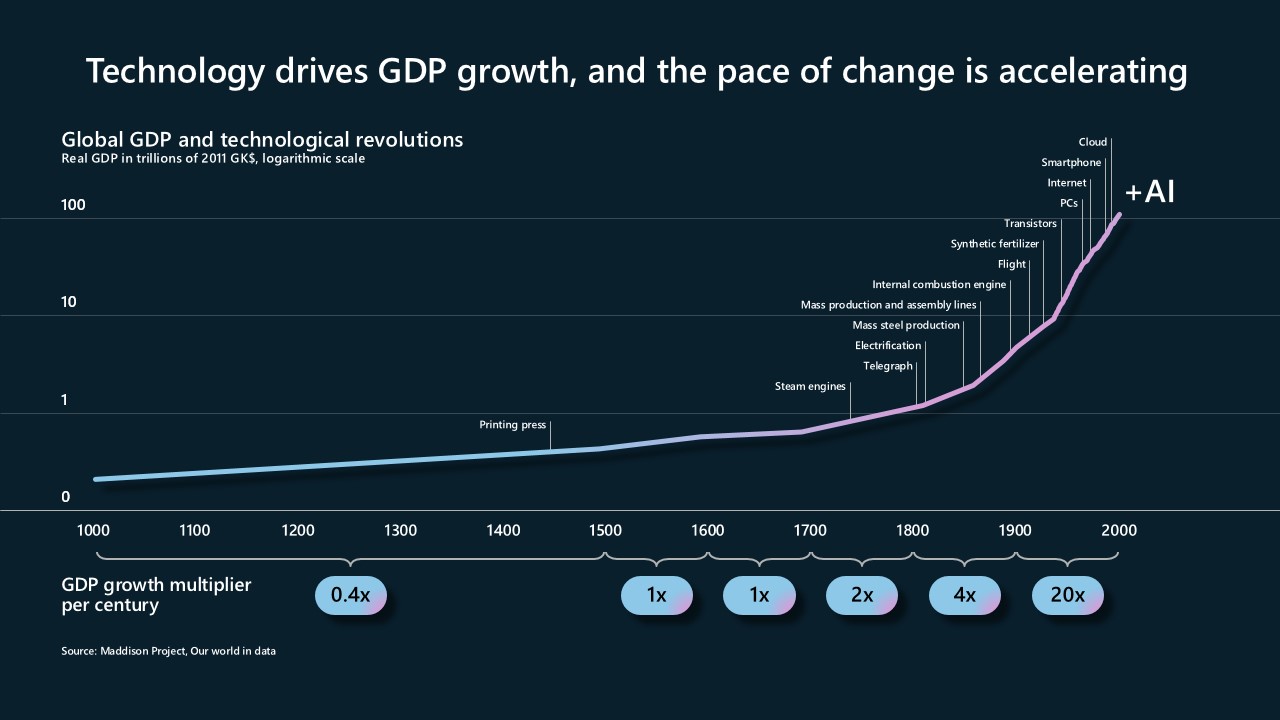

In so many ways, AI offers perhaps even more potential for the good of humanity than any invention that has preceded it. Since the invention of the printing press with movable type in the 1400s, human prosperity has been growing at an accelerating rate. Inventions like the steam engine, electricity, the automobile, the airplane, computing, and the internet have provided many of the building blocks for modern civilization. And, like the printing press itself, AI offers a new tool to genuinely help advance human learning and thought.

Guardrails for the future

Another conclusion is equally important: It’s not enough to focus only on the many opportunities to use AI to improve people’s lives. This is perhaps one of the most important lessons from the role of social media. Little more than a decade ago, technologists and political commentators alike gushed about the role of social media in spreading democracy during the Arab Spring. Yet, five years after that, we learned that social media, like so many other technologies before it, would become both a weapon and a tool, in this case aimed at democracy itself.

Today we are 10 years older and wiser, and we need to put that wisdom to work. We need to think early on and in a clear-eyed way about the problems that could lie ahead. As technology moves forward, it’s just as important to ensure proper control over AI as it is to pursue its benefits. We are committed and determined as a company to develop and deploy AI in a safe and responsible way. We also recognize, however, that the guardrails needed for AI require a broadly shared sense of responsibility and should not be left to technology companies alone.

When we at Microsoft adopted our six ethical principles for AI in 2018, we noted that one principle was the bedrock for everything else: accountability. This is the fundamental need: to ensure that machines remain subject to effective oversight by people, and that the people who design and operate machines remain accountable to everyone else. In short, we must always ensure that AI remains under human control. This must be a first-order priority for technology companies and governments alike.

This connects directly with another essential concept. In a democratic society, one of our foundational principles is that no person is above the law. No government is above the law. No company is above the law, and no product or technology should be above the law. This leads to a critical conclusion: People who design and operate AI systems cannot be accountable unless their decisions and actions are subject to the rule of law.

In many ways, this is at the heart of the unfolding AI policy and regulatory debate. How do governments best ensure that AI is subject to the rule of law? In short, what form should new law, regulation, and policy take?

A five-point blueprint for the public governance of AI

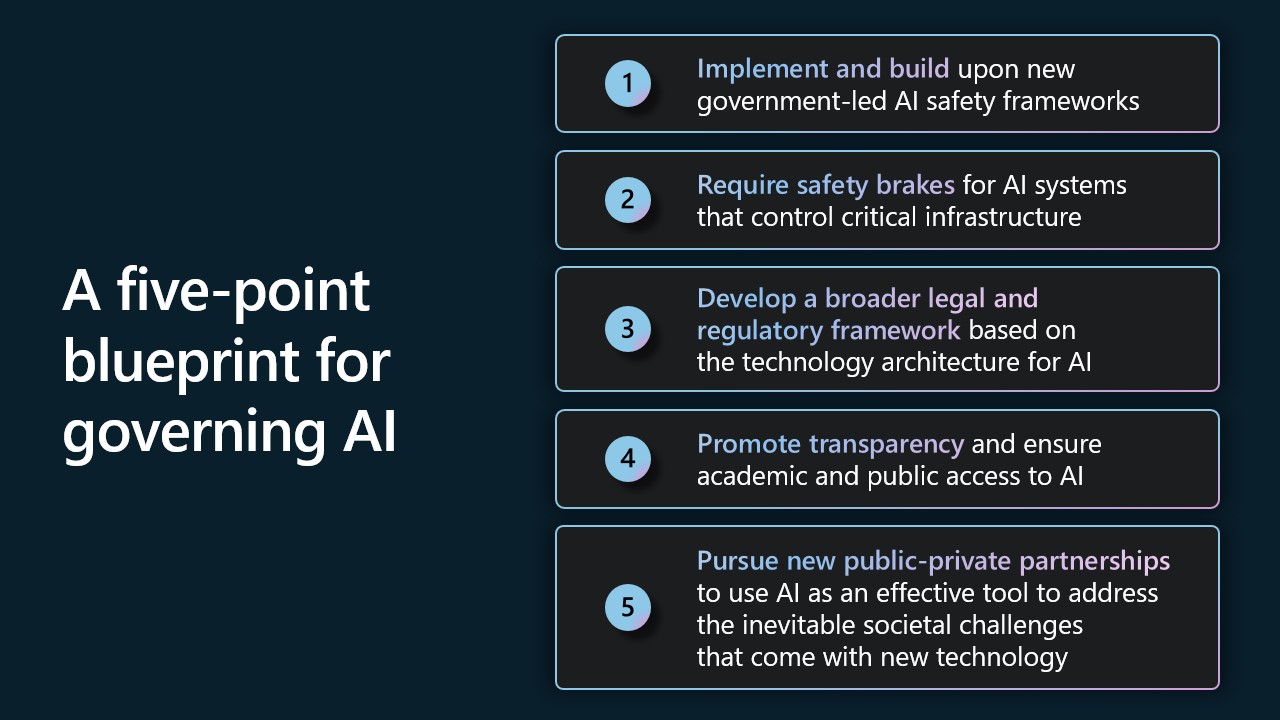

Section One of this paper offers a five-point blueprint to address several current and emerging AI issues through public policy, law, and regulation. We offer this recognizing that every part of this blueprint will benefit from broader discussion and require deeper development. But we hope this can contribute constructively to the work ahead.

First, implement and build upon new government-led AI safety frameworks. The best way to succeed is often to build on the successes and good ideas of others, especially when one wants to move quickly. In this instance, there is an important opportunity to build on work completed just four months ago by the U.S. National Institute of Standards and Technology, or NIST. Part of the Department of Commerce, NIST has completed and launched a new AI Risk Management Framework.

We offer four concrete suggestions to implement and build upon this framework, including commitments Microsoft is making in response to a recent White House meeting with leading AI companies. We also believe the administration and other governments can accelerate momentum through procurement rules based on this framework.

Second, require effective safety brakes for AI systems that control critical infrastructure. In some quarters, thoughtful individuals increasingly are asking whether we can satisfactorily control AI as it becomes more powerful. Concerns are sometimes posed regarding AI control of critical infrastructure like the electrical grid, water system, and city traffic flows.

This is the right time to discuss this question. This blueprint proposes new safety requirements that, in effect, would create safety brakes for AI systems that control the operation of designated critical infrastructure. These fail-safe systems would be part of a comprehensive approach to system safety that would keep effective human oversight, resilience, and robustness top of mind. In spirit, they would be similar to the braking systems engineers have long built into other technologies such as elevators, school buses, and high-speed trains, to safely manage not just everyday scenarios, but emergencies as well.

In this approach, the government would define the class of high-risk AI systems that control critical infrastructure and warrant such safety measures as part of a comprehensive approach to system management. New laws would require operators of these systems to build safety brakes into high-risk AI systems by design. The government would then ensure that operators test high-risk systems regularly to confirm that the system safety measures are effective. And AI systems that control the operation of designated critical infrastructure would be deployed only in licensed AI datacenters that would ensure a second layer of protection through the ability to apply these safety brakes, thereby ensuring effective human control.

Third, develop a broad legal and regulatory framework based on the technology architecture for AI. We believe there will need to be a legal and regulatory architecture for AI that reflects the technology architecture for AI itself. In short, the law will need to place various regulatory responsibilities upon different actors based upon their role in managing different aspects of AI technology.

For this reason, this blueprint includes information about some of the critical pieces that go into building and using new generative AI models. Using this as context, it proposes that different laws place specific regulatory responsibilities on the organizations exercising certain responsibilities at three layers of the technology stack: the applications layer, the model layer, and the infrastructure layer.

This should first apply existing legal protections at the applications layer to the use of AI. This is the layer where the safety and rights of people will most be impacted, especially because the impact of AI can vary markedly in different technology scenarios. In many areas, we don’t need new laws and regulations. We instead need to apply and enforce existing laws and regulations, helping agencies and courts develop the expertise needed to adapt to new AI scenarios.

There will then be a need to develop new law and regulations for highly capable AI foundation models, best implemented by a new government agency. This will impact two layers of the technology stack. The first will require new regulations and licensing for these models themselves. And the second will involve obligations for the AI infrastructure operators on which these models are developed and deployed. The blueprint that follows offers suggested goals and approaches for each of these layers.

In doing so, this blueprint builds in part on a principle developed in recent decades in banking to protect against money laundering and criminal or terrorist use of financial services. The “Know Your Customer” (KYC) principle requires that financial institutions verify customer identities, establish risk profiles, and monitor transactions to help detect suspicious activity. It would make sense to take this principle and apply a KY3C approach that creates in the AI context certain obligations to know one’s cloud, one’s customers, and one’s content.

In the first instance, the developers of designated, powerful AI models should “know the cloud” on which their models are developed and deployed. In addition, such as for scenarios that involve sensitive uses, the company that has a direct relationship with a customer, whether it be the model developer, application provider, or cloud operator on which the model is operating, should “know the customers” that are accessing it.

Also, the public should be empowered to “know the content” that AI is creating through the use of a label or other mark informing people when something like a video or audio file has been produced by an AI model rather than a human being. This labeling obligation should also protect the public from the alteration of original content and the creation of “deep fakes.” This will require the development of new laws, and there will be many important questions and details to address. But the health of democracy and the future of civic discourse will benefit from thoughtful measures to deter the use of new technology to deceive or defraud the public.

Fourth, promote transparency and ensure academic and nonprofit access to AI. We believe a critical public goal is to advance transparency and broaden access to AI resources. While there are some important tensions between transparency and the need for security, there exist many opportunities to make AI systems more transparent in a responsible way. That’s why Microsoft is committing to an annual AI transparency report and other steps to expand transparency for our AI services.

We also believe it is critical to expand access to AI resources for academic research and the nonprofit community. Basic research, especially at universities, has been of fundamental importance to the economic and strategic success of the United States since the 1940s. But unless academic researchers can obtain access to substantially more computing resources, there is a real risk that scientific and technological inquiry will suffer, including inquiry relating to AI itself. Our blueprint calls for new steps, including steps we will take across Microsoft, to address these priorities.

Fifth, pursue new public-private partnerships to use AI as an effective tool to address the inevitable societal challenges that come with new technology. One lesson from recent years is what democratic societies can accomplish when they harness the power of technology and bring the public and private sectors together. It’s a lesson we need to build upon to address the impact of AI on society.

We will all benefit from a strong dose of clear-eyed optimism. AI is an extraordinary tool. But, like other technologies, it too can become a powerful weapon, and there will be some around the world who will seek to use it that way. Still, we should take some heart from the cyber front and the last year-and-a-half in the war in Ukraine. What we found is that when the public and private sectors work together, when like-minded allies come together, and when we develop technology and use it as a shield, it’s more powerful than any sword on the planet.

Important work is needed now to use AI to protect democracy and fundamental rights, provide broad access to the AI skills that will promote inclusive growth, and use the power of AI to advance the planet’s sustainability needs. Perhaps more than anything, a wave of new AI technology provides an occasion for thinking big and acting boldly. In each area, the key to success will be to develop concrete initiatives and bring governments, respected companies, and energetic NGOs together to advance them. We offer some initial ideas in this report, and we look forward to doing much more in the months and years ahead.

Governing AI within Microsoft

Ultimately, every organization that creates or uses advanced AI systems will need to develop and implement its own governance systems. Section Two of this paper describes the AI governance system within Microsoft: where we began, where we are today, and how we are moving into the future.

As this section recognizes, the development of a new governance system for new technology is a journey in and of itself. A decade ago, this field barely existed. Today, Microsoft has almost 350 employees specializing in it, and we are investing in our next fiscal year to grow this further.

As described in this section, over the past six years we have built out a more comprehensive AI governance structure and system across Microsoft. We didn’t start from scratch, borrowing instead from best practices for the protection of cybersecurity, privacy, and digital safety. This is all part of the company’s comprehensive enterprise risk management (ERM) system, which has become a critical part of the management of corporations and many other organizations in the world today.

When it comes to AI, we first developed ethical principles and then had to translate these into more specific corporate policies. We’re now on version 2 of the corporate standard that embodies these principles and defines more precise practices for our engineering teams to follow. We’ve implemented the standard through training, tooling, and testing systems that continue to mature rapidly. This is supported by additional governance processes that include monitoring, auditing, and compliance measures.

As with everything in life, one learns from experience. When it comes to AI governance, some of our most important learning has come from the detailed work required to review specific sensitive AI use cases. In 2019, we founded a sensitive use review program to subject our most sensitive and novel AI use cases to rigorous, specialized review that results in tailored guidance. Since that time, we have completed roughly 600 sensitive use case reviews. The pace of this activity has quickened to match the pace of AI advances, with almost 150 such reviews taking place in the past 11 months.

All of this builds on the work we have done and will continue to do to advance responsible AI through company culture. That means hiring new and diverse talent to grow our responsible AI ecosystem and investing in the talent we already have at Microsoft to develop skills and empower them to think broadly about the potential impact of AI systems on individuals and society. It also means that, much more than in the past, the frontier of technology requires a multidisciplinary approach that combines great engineers with talented professionals from across the liberal arts.

All this is offered in this paper in the spirit that we’re on a collective journey to forge a responsible future for artificial intelligence. We can all learn from each other. And no matter how good we may think something is today, we will all need to keep getting better.

As technological change accelerates, the work to govern AI responsibly must keep pace with it. With the right commitments and investments, we believe it can.