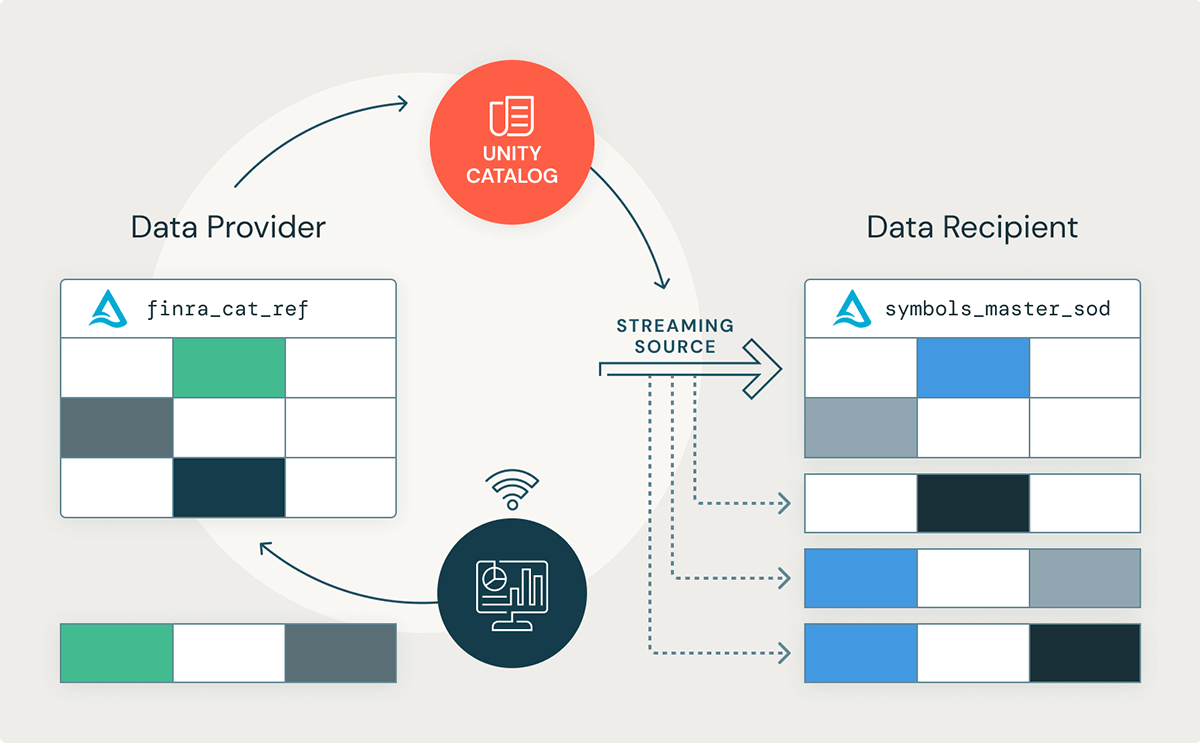

We are thrilled to reveal that assistance for utilizing Structured Streaming with Delta Sharing is now normally readily available (GA) in Azure, AWS, and GCP! This brand-new function will enable information receivers on the Databricks Lakehouse Platform to stream modifications from a Delta Table shared through the Unity Brochure

Information suppliers can take advantage of this ability to scale their data-as-a-service quickly, decrease the functional expense of sharing big information sets, enhance information quality with instant information recognition and quality checks as brand-new information gets here, and enhance client service with real-time information shipment. Likewise, information receivers can stream the most recent modifications from a shared dataset, decreasing the facilities expense of processing big batch information and setting the structure for cutting-edge, real-time information applications. Information receivers throughout numerous market verticals can take advantage of this brand-new function, for instance:

- Retail: Information experts can stream the most recent sales figures for a seasonal style line and present company insights in the kind of a BI report.

- Health Life Sciences: Health professionals can stream electrocardiogram readings into an ML design to determine problems.

- Production: Structure management groups can stream wise thermostat readings and determine what time of day or night heating and cooling systems must effectively switch on or off.

Frequently, information groups trust information pipelines performed in a batch style to process their information due to the reality that batch execution is both robust and simple to execute. Nevertheless, today, companies require the most recent getting here information to make real-time company choices. Structured streaming not just streamlines real-time processing however likewise streamlines batch processing by decreasing the variety of batch tasks to simply a couple of streaming tasks. Transforming batch information pipelines to streaming is insignificant as Structured Streaming supports the very same DataFrame API.

In this blog site post, we’ll check out how business can take advantage of Structured Streaming with Delta Sharing to make the most of business worth of their information in near real-time utilizing an example in the monetary market. We’ll likewise take a look at how other complementary functions, like Databricks Workflows, can be utilized in combination with Delta Sharing and Unity Brochure to construct a real-time information application.

Assistance for Structured Streaming

Possibly the most extremely prepared for Delta Sharing function over the previous couple of months has actually been included assistance for utilizing a shared Delta Table as a source in Structured Streaming This brand-new function will enable information receivers to construct real-time applications utilizing Delta Tables shared through Unity Brochure on the Databricks Lakehouse Platform.

How to Utilize Delta Showing Structured Streaming

Let’s take a more detailed take a look at how an information recipient may stream openly traded stock sign details for real-time trading insights. This post will utilize the FINRA Feline Reportable Equity Securities Sign Master dataset, which notes all stocks and equity securities traded throughout the U.S. National Market System (NMS). Structured Streaming can be utilized to construct real-time applications, however it can likewise work in circumstances where information gets here less regularly. For an easy note pad presentation, we’ll utilize a dataset that is upgraded 3 times throughout the day – as soon as at the start of the deal date (SOD), a 2nd time throughout the day to show any intraday modifications, and a 3rd time at the end of the deal date (EOD). There are no updates released on weekends or on U.S. vacations.

| Released File | Set Up |

|---|---|

| Feline Reportable Equity Securities Sign Master– SOD | 6:00 a.m. EST |

| Feline Reportable Alternatives Securities Sign Master– SOD | 6:00 a.m. EST |

| Member ID (IMID) List | 6:00 a.m. EST |

| Member ID (IMID) Disputes List | 6:00 a.m. EST |

| Feline Reportable Equity Securities Sign Master– Intraday | 10:30 a.m. EST, and around every 2 hours up until EOD file is released |

| Feline Reportable Alternatives Securities Sign Master– Intraday | 10:30 a.m. EST, and around every 2 hours up until EOD file is released |

| Feline Reportable Equity Securities Sign Master– EOD | 8 p.m. EST |

| Feline Reportable Alternatives Securities Sign Master– EOD | 8 p.m. EST |

Table 1.1 – The FINRA feline sign and member recommendation information is released throughout business day. There are no updates released on weekends or on U.S. vacations.

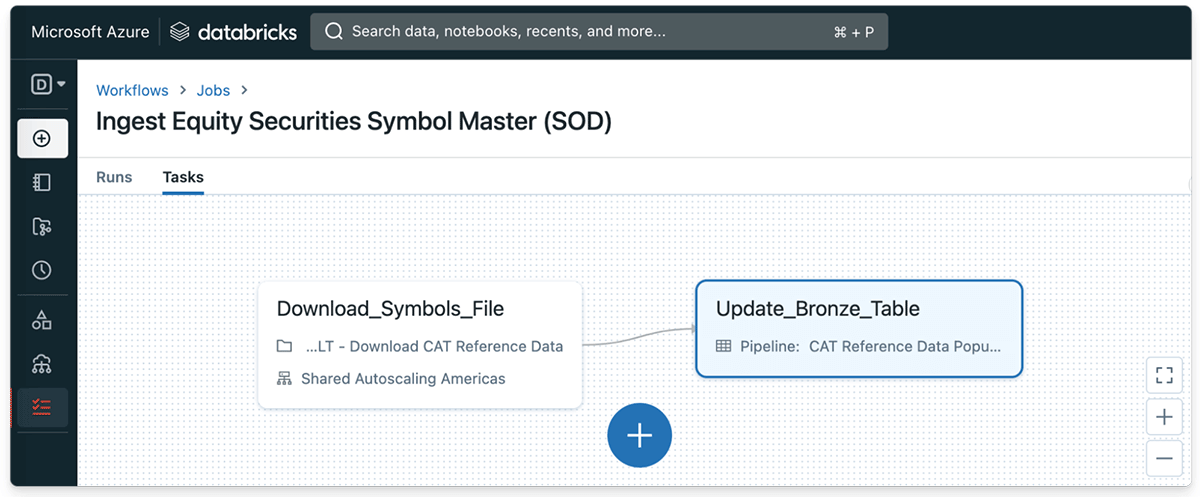

From Information Supplier’s Point of view: Consuming the feline Data utilizing Databricks Workflows

Among the significant advantages of the Databricks Lakehouse Platform is that it makes continually streaming modifications into a Delta Table exceptionally simple. We’ll initially begin by specifying an easy Python job that downloads the FINRA feline equity securities sign file at the start of the deal date (SOD). Later, we’ll conserve the released file to a momentary directory site on the Databricks filesystem.

# Initially, we'll download the FINRA feline Equity Securities Symbols declare today's Start of Day

demand = requests.get( catReferenceDataURL, stream = Real, allow_redirects = Real).

# Next, conserve the released file to a temperature directory site on the Databricks filesystem

with open( dbfsPath, " wb") as binary_file:.

for portion in request.iter _ material( chunk_size = 2048):.

if portion:.

binary_file. compose( portion).

binary_file. flush().

Code 1.1. – A basic Python job can download the FINRA feline equity sign file at the start of the trading day.

To show, we’ll likewise specify a function that will consume the raw file and continually upgrade a bronze table in our Delta Lake each time an upgraded file is released.

# Lastly, we'll consume the most recent equity signs CSV file into a "bronze" Delta table

def load_CAT_reference_data():

return (.

spark.read.option(" header", " real").

schema( catEquitySymbolsMasterSchema).

choice(" delimiter", "|").

format(" csv").

load( localFilePath).

withColumn(" catReferenceDataType", lit(" FINRACATReportableEquitySecurities_SOD")).

withColumn(" currentDate", current_date()).

withColumn(" currentTimestamp", current_timestamp()).

withColumn(" compositeKey", concat_ws(".", " sign", " listingExchange")).

).

Code. 1.2 – The FINRA feline equity sign information is consumed into a Delta Table at the start of each trading day.

Once it is begun, the Databricks Workflow will start occupying the feline equity signs dataset each time the file is released at the start of the trading day.

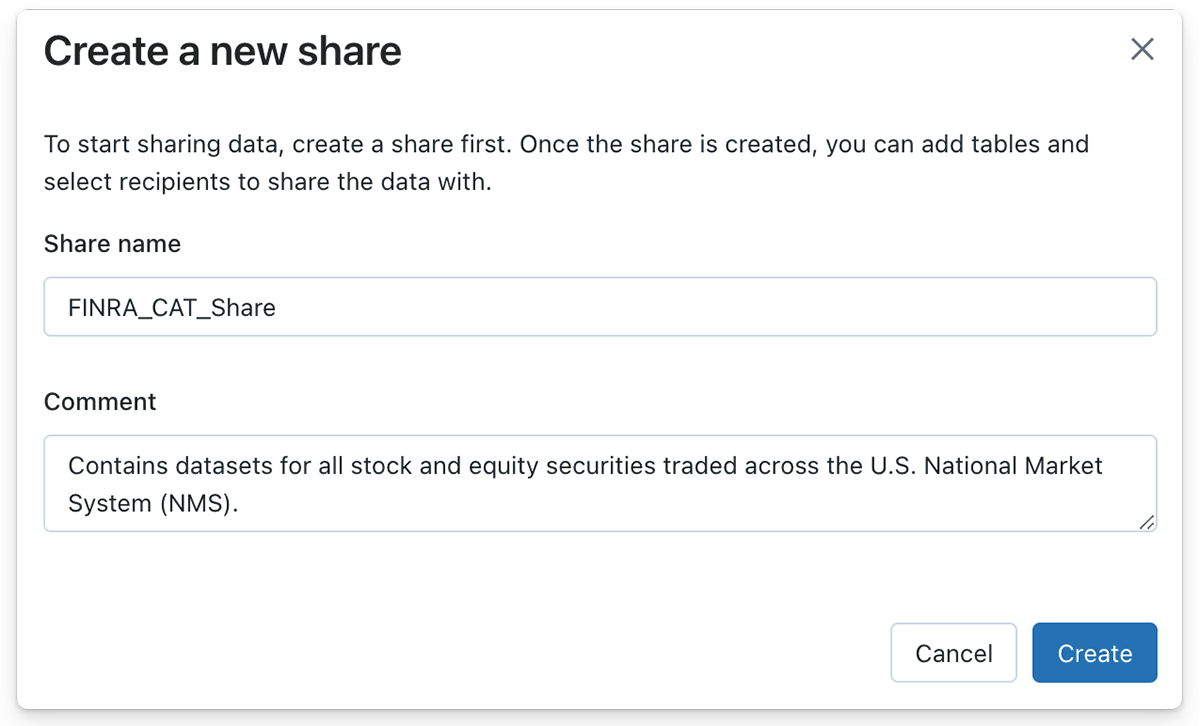

From Information Supplier’s Point of view: Sharing a Delta Table as a Streaming Source

Now that we have actually produced a streaming pipeline to consume updates to the sign file each trading day, we can take advantage of Delta Sharing to share the Delta Table with information receivers. Producing a Delta Share on the Databricks Lakehouse Platform can be finished with simply a couple of clicks of the button or with a single SQL declaration if SQL syntax is chosen.

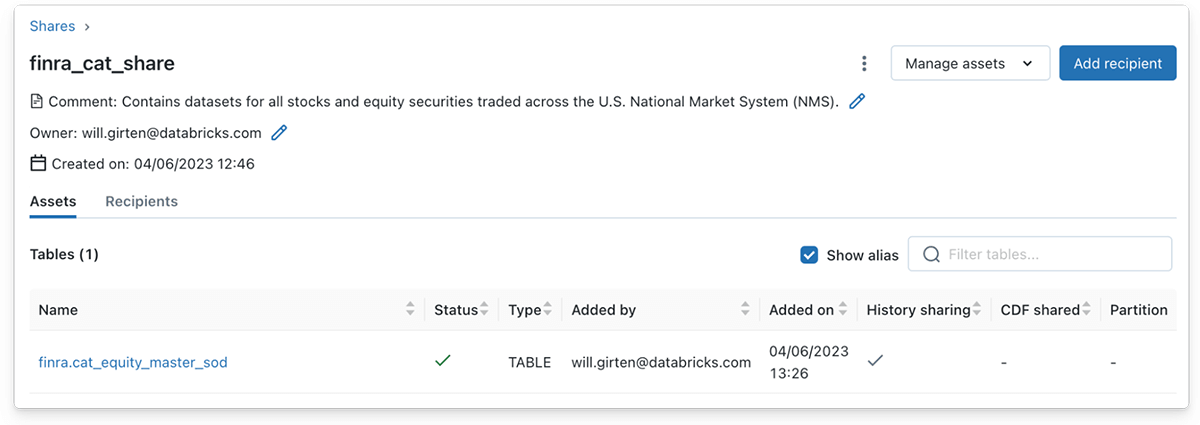

Likewise, an information supplier can occupy a Delta Show several tables by clicking the ‘ Manage possessions‘ button, followed by the ‘ Edit tables‘ button. In this case, the bronze Delta Table consisting of the equity sign information is contributed to the Share item.

Keep in mind that the complete history of a Delta table should be shared to support checks out utilizing Structured Streaming. History sharing is made it possible for by default utilizing the Databricks UI to include a Delta table to a Share. Nevertheless, history sharing should be clearly defined when utilizing the SQL syntax.

/ **.

A Delta table should be shown history in order to support.

Glow Structured Stream checks out.

*/

ALTER SHARE finra_cat_share.

ADD TABLE finance_catalog. finra.symbols _ master.

WITH HISTORY;.

Code 1.4 – The history of a Delta table should be clearly shared to support Structured Streaming checks out when utilizing the SQL syntax.

From Information Receiver’s Point of view: Streaming a Shared Delta Table

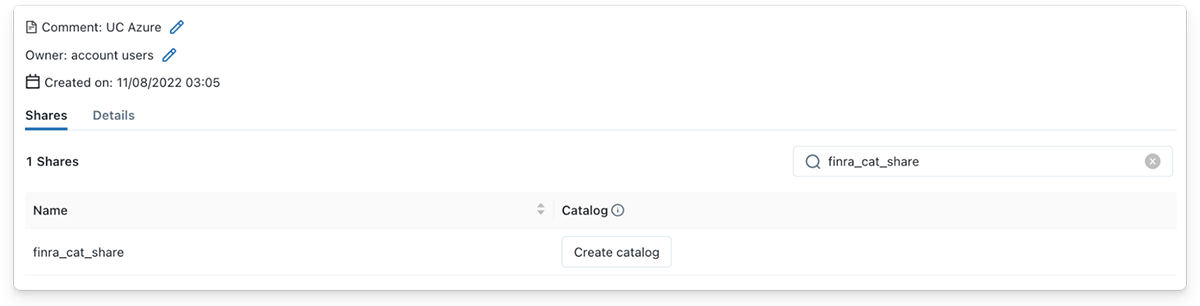

As an information recipient, streaming from a shared Delta table is simply as easy! After the Delta Share has actually been shown an information recipient, the recipient will instantly see the Share appear under the supplier information in Unity Brochure. Consequently, the information recipient can produce a brand-new brochure in Unity Brochure by clicking the ‘ Develop brochure‘ button, offering a significant name, and including an optional remark to explain the Share contents.

Information receivers can stream from a Delta Table shared through Unity Brochure utilizing Databricks Runtime 12.1 or higher. In this example, we have actually utilized a Databricks cluster with Databricks 12.2 LTS Runtime set up. An information recipient can check out the shared Delta table as a Glow Structured Stream utilizing the deltaSharing information source and providing the name of the shared table.

# Stream from the shared Delta table that's been produced with a brand-new Brochure in Unity Brochure

equity_master_stream = (spark.readStream.

format(' deltaSharing').

table(' finra_cat_catalog. finra.cat _ equity_master')).

equity_master_stream. display screen().

Code 1.4 – An information recipient can stream from a shared Delta Table utilizing the deltaSharing information source.

As an additional example, let’s integrate the shared feline equity signs master dataset with a stock rate history dataset, regional to the information recipient’s Unity Brochure. We’ll start by specifying an energy function for getting the weekly stock rate histories of a provided stock ticker sign.

import yfinance as yf.

import pyspark.sql.functions as F.

def get_weekly_stock_prices( sign: str):

""" Scrapes the stock rate history of a ticker sign over the last 1 week.

arguments:.

sign (String) - The target stock sign, generally a 3-4 letter abbreviation.

returns:.

(Glow DataFrame) - The present rate of the supplied ticker sign.

"""

ticker = yf.Ticker( sign).

# Recover the last documented stock rate in the recently

current_stock_price = ticker.history( duration =" 1wk").

# Transform to a Glow DataFrame

df = spark.createDataFrame( current_stock_price).

# Select just columns pertinent to stock rate and include an occasion processing timestamp

event_ts = str( current_stock_price. index[0]).

df = (df.withColumn(" Event_Ts", F.lit( event_ts)).

withColumn(" Sign", F.lit( sign)).

choose(.

F.col(" Sign"), F.col(" Open"), F.col(" High"), F.col(" Low"), F.col(" Close"),.

F.col(" Volume"), F.col(" Event_Ts"). cast(" timestamp")).

).

# Return the most recent rate details

return df.

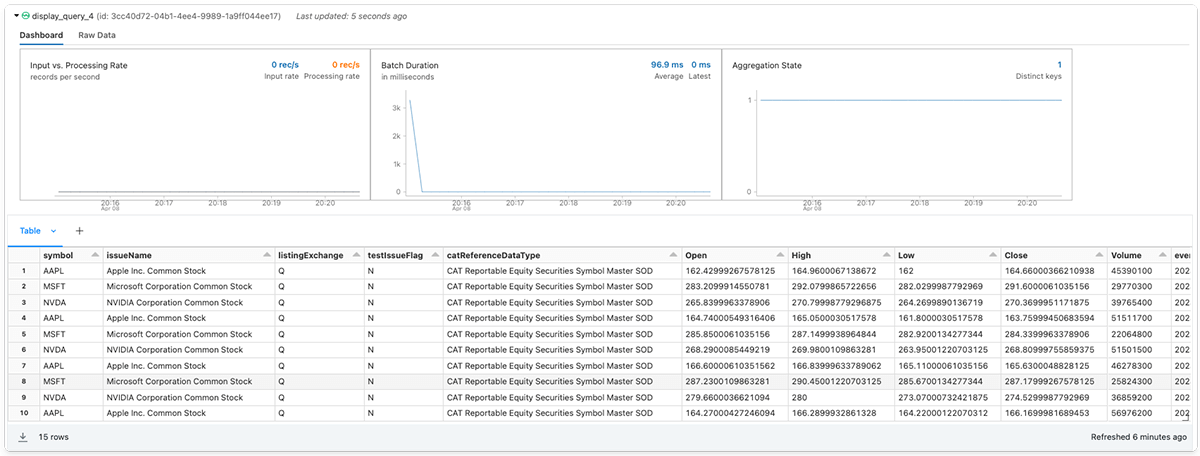

Next, we’ll collaborate the equity stock master information stream with the regional stock rate histories of 3 big tech stocks – Apple Inc. (AAPL), the Microsoft Corporation (MSFT), and the Invidia Corporation (NVDA).

# Get the weekly rate histories for 3 significant tech stocks

aapl_stock_prices = get_weekly_stock_prices(' AAPL').

msft_stock_prices = get_weekly_stock_prices(' MSFT').

nvidia_stock_prices = get_weekly_stock_prices(' NVDA').

all_stock_prices = aapl_stock_prices. union( msft_stock_prices). union( nvidia_stock_prices).

# Sign up with the stock rate histories with the equity signs master stream

symbols_master = spark.readStream. format(' deltaSharing'). table(' finra_catalog. finra.cat _ equity_master').

( symbols_master. sign up with( all_stock_prices, on =" sign", how =" inner").

choose(" sign", " issueName", " listingExchange", " testIssueFlag", " catReferenceDataType",.

" Open", " High", " Low", " Close", " Volume", " event_ts").

). display screen().

Lastly, the information recipient can include an optional location sink and begin the streaming question.

Starting with Delta Sharing on Databricks

I hope you enjoyed this example of how companies can take advantage of Delta Sharing to make the most of business worth of their information in near real-time.

Wish to get going with Delta Sharing however do not understand where to begin? If you currently are a Databricks client, follow the guide to get going utilizing Delta Sharing ( AWS| Azure| GCP). Check out the documents to find out more about the setup alternatives consisted of in with function. If you are not an existing Databricks client, register for a totally free trial with a Premium or Business work area.

Credits

We wish to extend unique thanks for all of the contributions to this release, consisting of Abhijit Chakankar, Lin Zhou, and Shixiong Zhu.